To Quantify Or Not to Quantify? Foreign Policy Needs Measurement

By: Toby Weed | October 2, 2023

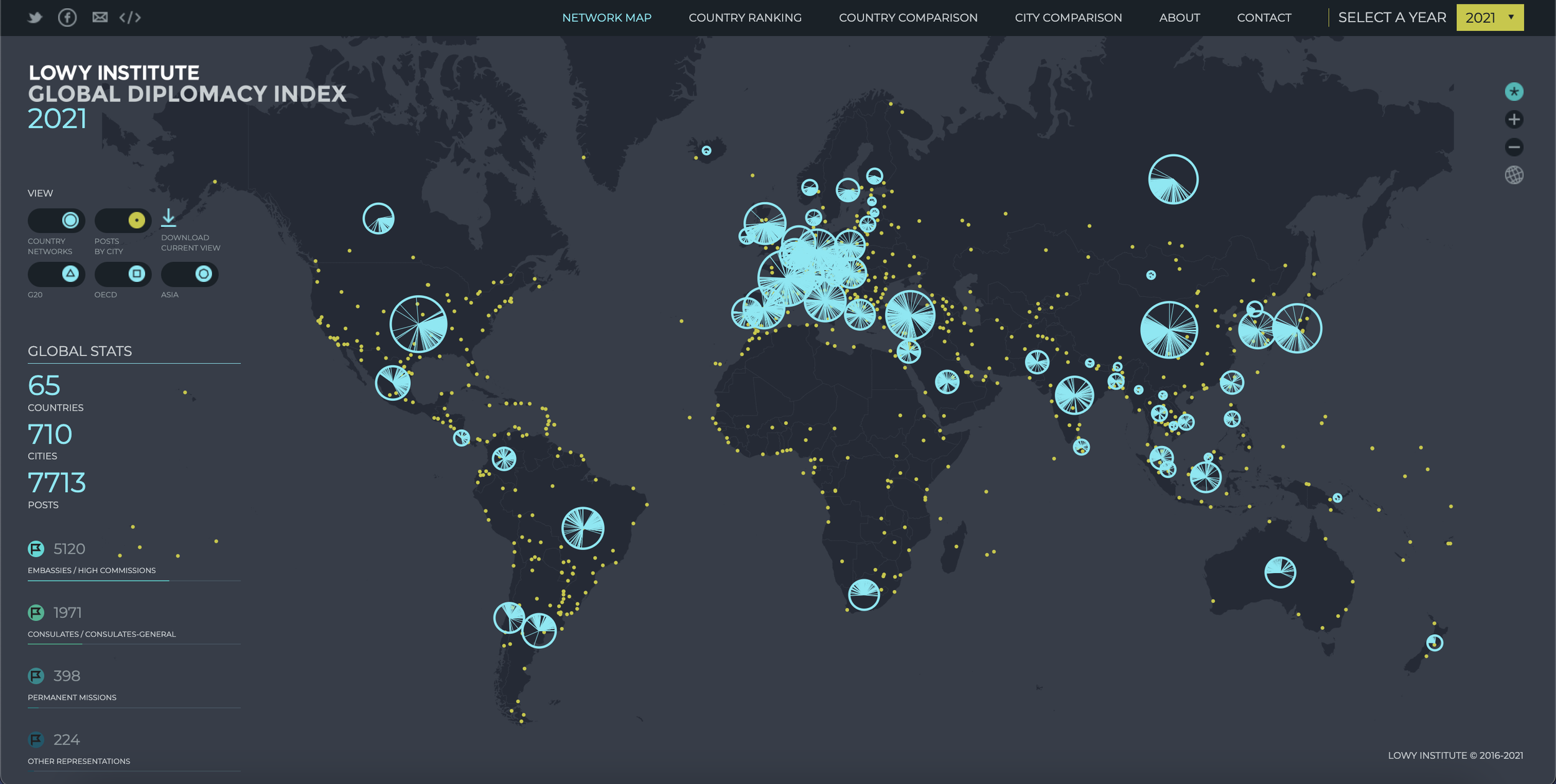

The 2021 Lowy Institute Global Diplomacy Index visualises the diplomatic networks of 65 G20, OECD and Asian countries and territories.

I have a confession: I was trained as a mathematician. I chose to work at a foreign policy think tank because I believe that the issues in this domain are some of the most important in the world. Yet transitioning from work in primarily quantitative research settings to a historically qualitative one involved some shocks. The biggest is the ambient discomfort with – and in some cases, outward hostility to – the tools, products, and associates of mathematics. Mathematically informed, scientific, evidence-based thinking has revolutionized society, starting with the physical sciences but extending to fields like business management (Hubbard, 2012), medicine, aviation, and sports (Sutherland, 2022), and international development (White, 2019). But it is 2023, and the U.S. foreign policy establishment still relies more or less exclusively on ad hoc intuitive analysis to make some of the most important decisions in the world. Diplomacy is late to the game.

My organization, fp21, advocates for an approach to policy in which the decision-making process is built around the pursuit of an accurate understanding of the world and the most likely impact of policy interventions. Expressed thus, this is an uncontroversial stance. The friction arises in the way that we conceive of that pursuit. The U.S. foreign policy establishment does not appear to take a scientific approach to understanding the world or the likely consequences of its actions, and therefore policy decisions are not informed as well as they could be.

This article argues that the foreign policy establishment needs to follow the example set by other fields and incorporate quantitative measurement into its analytical and decision-making processes. By measuring the phenomena and concepts central to the work – using mathematical language to discuss them and accompanying that discussion with empirical observation – foreign policy practitioners can reduce their uncertainty and make better decisions.

Why is Quantification Controversial?

This piece advocates for the informed use of very simple mathematical formalism, such as numbers, in foreign policy conversations. It’s a simple habit, but also a powerful one. Doing so allows us to “show our work.” This is helpful for sharing perspectives among groups of people with different contextual understandings. It facilitates the identification of mistakes, biases, and weak assumptions. Numbers also allow for comparison between similar cases and encourage more precise feedback in order to learn over time. It’s that simple: we use numbers – and all mathematical tools, really – because they allow us to express subtle concepts precisely and explicitly.

Yet the U.S. foreign policy establishment has an aversion to quantification and scientific thinking. It is not uncommon to hear high-level officials say things like “diplomacy is fuzzy... it’s almost impossible to quantify what we do, and in fact I think that there’s a great danger in trying to quantify it” (a direct quote from a former Ambassador at a public event). So we ask: why is quantification so controversial?

One common objection to the statement “diplomacy should be made more scientific” is “diplomacy can’t be made more scientific.” It is true that unlike in physics, there are no fundamental equations for foreign policy. But the purpose of quantitative analysis isn’t to subject policymakers to the Procrustean whims of some oversimplified equations. Instead, quantification is intended as one tool among many to help decision-makers see the world through clearer eyes.

Enumerating some of the legitimate challenges to the scientific study of foreign policy should help ground this conversation in the work at hand. One frequently raised issue is that “at a basic level, much of the work of diplomacy is unobservable to researchers, conducted behind closed doors or through classified cables” (Malis, 2021). In other words, there is a scarcity of good public data on foreign policy.

Even when good public data is available, it is often not useful for policymakers. For example, it is interesting to know that poverty contributes to political instability, but “poverty” is not a lever that policymakers can easily adjust with foreign policy. Many of the variables scholars carefully study and measure are not directly relevant to policymakers’ decisions, or fail to inform any actionable recommendations. The most policy-relevant scholarship focuses its attention on concepts and tools that are applicable to actual decision-making processes.

Another core challenge is that “the results of the work of foreign ministries are typically indirect, difficult to measure and not easy to influence directly,” and “whether an expected result is obtained does not depend completely on the actions of the foreign ministry” (Mathiason, 2007, p. 226). That is, it can be hard to determine causality in diplomacy. Did a leader withdraw their armed forces because of skilled negotiation, or was it due to the opposition’s resolve? Did a civil war end because of the efforts of third-party mediators, or because the warring parties simply grew tired of the violence? Social science remains a relatively young and immature field which offers few, if any, ironclad laws of human behavior.

Many officials worry about the unintended consequences of measurement in foreign policy. The scholar Kishan Rana suggests that data-driven management “may misdirect diplomatic activity to the measurable forms and de-valorise the more subtle, long-term work of relationship building” (2004, p. 15). For instance, a 1983 joint resolution of Congress asked the State Department to produce a report counting how often other countries voted with the United States in the UN General Assembly. The goal was to nudge embassies to invest more in multilateral diplomacy, but embassies were observed “equally weighting ‘throw-away’ policies with real impactful U.S. policy goals” (Seymour, 2020). As the management guru Peter Drucker famously observed, “What gets measured gets managed – even when it’s pointless to measure and manage it, and even if it harms the purpose of the organization to do so.”

Skeptics have a point: the foreign policy arena really does present some legitimate challenges to traditional scientific methods. These challenges can seem intractable, leading many policy-minded experts to throw up their hands and say, “this domain just isn’t compatible with scientific rigor.” But this sentiment overlooks the many similar challenges which science routinely faces and overcomes in other domains.

It’s Approximate, It’s Subjective: It’s Just Vocabulary

Much of the discomfort with quantification seems to be rooted in misconceptions about what it means to measure something in the first place. One objection to the use of quantified measurement in a domain like foreign policy is that any measurement will necessarily be a simplified and therefore imperfect representation of reality. This argument has merit. But the same objection applies to non-mathematical language: nouns, verbs, and adjectives are imperfect symbolic representations which humans use to describe their experiences of an infinitely complex reality. Both forms of approximation have their strengths and weaknesses. This is not a reason to dismiss measurement out of hand. In fact, a quantified estimate often reduces ambiguity and clarifies uncertainty relative to alternatives.

A related objection is that quantification appears to connote a false sense of scientific objectivity. Indeed, there are many ways in which quantitative analysis can miss the mark set by reality. Objectivity is a problematic concept: in practice, every participant in every discourse is a subject. We have no access to anything which is not by definition subjective, and the pretense of perfect objectivity is a barrier to open scientific dialogue. But, perhaps contrary to popular belief, scientists who advocate for the use of numbers and mathematical models know this as well as anyone. To apply mathematics to a real-world problem does not require one to pretend they have perfect access to the objective truth of the matter. When one expresses something observable as a number, one is not suggesting they know that thing completely, nor that that thing literally is that number. One is merely expressing their ideas more explicitly, precisely, and reproducibly than they otherwise could.

For instance, how does one measure the occurrence of civil war? A common qualitative definition of war might be “a violent conflict between the government and a non-state actor.” Each word in the definition contains enormous ambiguity that might be clarified through a process of quantification. How many violent deaths must occur to rise to the level of a war? What qualifies as a non-state actor? Did the American civil rights movement count as a civil war? Does a police effort to uproot a drug gang count as a civil war? Is it a civil war if only one side uses violence?

The Uppsala Conflict Data Program (UCDP) visualizes state-based armed conflicts by region, 1989-2022

There are no “correct” answers to these questions, but quantification requires one to make choices explicitly and transparently. It draws attention to fringe cases. Once the concept is quantified, one can more easily answer questions about what factors tend to cause civil wars, how to prevent them, and how to end them. If the underlying data is messy or misleading, that is not a reason to avoid quantification in the first place; it is an opportunity to present improved definitions or collect better data.

Thus, avoiding quantification does not demonstrate the prudent restraint of the seasoned diplomat in the face of overzealous mathematical chauvinism. Quantification is merely a tool. Avoiding it unnecessarily curtails one’s ability to precisely formulate their beliefs about the world and how to shape it.

How to Use Numbers Effectively

Mathematics does not require one to presume perfect objectivity or godlike omniscience. In fact, the opposite is true: mathematics offers some of the very best tools for handling uncertainty. My favorite definition of measurement, for instance, is “a quantitatively expressed reduction of uncertainty based on one or more observations” (Hubbard, 2010, p. 23).

Measurement is best accompanied by a clear explanation of its intended purpose. For policymakers, that purpose should usually be to support a vital analytical question or pending decision. For instance, when considering how to support Ukraine in the face of the Russian invasion, one might want to know the costs to Russia’s economy, and how much economic pain the Kremlin can bear. While precise answers are not possible, one might devise ways to create reasonable estimates that will reduce policymaker uncertainty.

The first step to measuring something is thus to identify which decision the measurement is intended to support.

‘If people ask “How do we measure X?” they may already be putting the cart before the horse. The first question is “What is your dilemma?” Then we can define all of the variables relevant to the dilemma and determine what we really mean by ambiguous ideas like “training quality” or “economic opportunity.”’ (Hubbard, 2010, p. 41).

Quantifying with purpose is a critical step in managing the challenges we listed previously. It helps us make the right assumptions, navigate tradeoffs, and target the right observables.

The next step is to consider how to communicate the inherent uncertainty in your measurement. Measurement is vital to intelligence tradecraft. Research shows that different people interpret words expressing likelihoods very differently. When you say that something “might” happen, two people might walk away from that conversation with two very different beliefs about how likely you believe that event to be. This phenomenon troubled Sherman Kent, who has been described as “the father of intelligence analysis,” way back in 1951. Ultimately, it led the intelligence community to adopt Analytic Standards which include, among other things, a clearly identified numerical scale of probability (see the table below). When an intelligence analyst says something is “very unlikely,” this translates to a 5 to 20% chance of occurrence. Unfortunately, the foreign policy community has not yet followed suit. In fact, the intelligence community continues to avoid explicitly using numeric expressions of probability (rather than the verbal equivalents defined by the Analytic Standards) because they know that policymakers do not understand them.

| 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| --- | --- | --- | --- | --- | --- | --- |
| almost no chance | very unlikely | unlikely | roughly even chance | likely | very likely | almost certain(ly) |
| remote | highly improbable | improbable (improbably) | roughly even odds | probable (probably) | highly probable | nearly certain |
| 01-05% | 05-20% | 20-45% | 45-55% | 55-80% | 80-95% | 95-99% |
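To make the scale concrete, here is a minimal Python sketch that looks up the verbal term for a numeric probability using the bands in the table above. The function name `estimative_term` is hypothetical, and because adjacent bands share an endpoint (20% appears in two columns), the sketch assumes a shared endpoint belongs to the lower band. That tie-breaking rule is an illustrative choice, not part of the standard.

```python
# Bands copied from the table above: (lower %, upper %, verbal term).
BANDS = [
    (1, 5, "almost no chance"),
    (5, 20, "very unlikely"),
    (20, 45, "unlikely"),
    (45, 55, "roughly even chance"),
    (55, 80, "likely"),
    (80, 95, "very likely"),
    (95, 99, "almost certain"),
]


def estimative_term(percent: float) -> str:
    """Return the verbal term whose band contains `percent` (hypothetical helper)."""
    if not 1 <= percent <= 99:
        raise ValueError("the table only covers probabilities from 1% to 99%")
    for lower, upper, term in BANDS:
        # Assumption: bands are checked in order, so a shared endpoint
        # such as 20% is assigned to the lower band ("very unlikely").
        if lower <= percent <= upper:
            return term
    raise AssertionError("unreachable: the bands cover 1% to 99% without gaps")


print(estimative_term(10))  # very unlikely
print(estimative_term(50))  # roughly even chance
```

Writing the lookup down forces the same kind of explicit choice discussed earlier: the table is silent about where exactly 20% falls, and the code cannot be.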

The institutions of foreign policy would thus do well to integrate some competence with probability and statistics into every level of decision making. One useful tool is a statistical method known as Bayesian inference. I won’t present the mathematics in detail, but the basic idea is that propositional beliefs are best held as probabilities, and these probabilities should be updated (according to certain rules) whenever new information is received. These ideas have become mainstream through popular science books such as Superforecasting and The Signal and the Noise.

In The Signal and the Noise, Nate Silver offers an example of Bayesian thinking. An observer on September 10th, 2001 might, if prompted, assign a very low chance of terrorists crashing planes into the World Trade Center, say 1 in 20,000, or 0.005%. They might also say that the chance of a plane accidentally crashing into the Twin Towers is very low. Looking at the historical incidence of such aviation accidents in New York would give a “base rate” of 1:12,500, or 0.008% on any given day. The third input in Silver’s calculation is the chance of a plane hitting the towers if terrorists really were attacking them, which he sets at 100%. After the first plane crashed into the World Trade Center on 9/11, given these three pieces of information and using Bayes’ Theorem, the observer would revise their estimate of an ongoing terror attack up to 38% (Silver, 2012, p. 247) – not a certain bet, but probably better than any competing explanation at that moment in time. “Successful gamblers,” Silver explains, “think of the future as speckles of probability, flickering upwards and downward like a stock market ticker to every new jolt of information” (Ibid., p. 237). The utility of this style of thinking extends beyond literal, direct applications of Bayes’ Theorem. Probabilistic estimates, with all of their imperfections and uncertainties, are salient to actual policy decisions, and should be sought whenever possible. If high-level policymakers understood the basic principles of probability, they would be able to make more informed use of probabilistic estimates and forecasts. This alone could empower the foreign policy establishment to act with a great deal more precision, sophistication, and clarity.
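For readers who want to check the arithmetic, the update can be reproduced in a few lines of Python. This is a minimal sketch: the function and variable names are illustrative, and it assumes that a plane would hit the towers with certainty if an attack were underway, which is the third input to the calculation alongside the two rates quoted above.

```python
def posterior(prior: float, p_given_true: float, p_given_false: float) -> float:
    """Bayes' theorem for a yes/no hypothesis after a single observation."""
    numerator = prior * p_given_true
    return numerator / (numerator + (1 - prior) * p_given_false)


prior_attack = 1 / 20_000        # 0.005%: belief in an attack before any plane hits
p_hit_if_attack = 1.0            # assumption: a plane hits for certain if an attack is underway
p_hit_if_no_attack = 1 / 12_500  # 0.008%: base rate of an accidental crash

after_first_plane = posterior(prior_attack, p_hit_if_attack, p_hit_if_no_attack)
print(f"{after_first_plane:.0%}")  # 38%
```

The point is less the exact figure than the mechanics: a very small prior, combined with evidence that is far more likely under one explanation than the other, moves the estimate by several orders of magnitude.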

Decades of research in psychology demonstrate that humans tend to be overconfident in their beliefs (Kahneman, 2011). The thoughtful use of numbers, especially probabilistic estimates and measures of uncertainty, is a way to come to terms with, and eventually to calibrate, one’s own confidence. We live in a complex world. The most effective decision maker in such a world should demonstrate humility, but also be willing to formulate a coherent set of beliefs. They must state those beliefs clearly while remaining open to alternative explanations. Extensive evidence demonstrates that quantitative measurement, well used, facilitates this process and reduces cognitive biases (e.g. Friedman et al., 2018).
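What calibrating one’s confidence could look like in practice can be sketched briefly. The idea is to record each numeric forecast alongside what actually happened, then compare the stated probability with the observed frequency. The function name and the track record below are hypothetical and invented purely for illustration; they are not data from any study.

```python
from collections import defaultdict


def calibration_table(forecasts):
    """Map each stated probability to the share of those forecasts that came true."""
    outcomes = defaultdict(list)
    for stated, happened in forecasts:
        outcomes[stated].append(1 if happened else 0)
    return {p: sum(hits) / len(hits) for p, hits in sorted(outcomes.items())}


# Hypothetical track record (illustrative only): (stated probability, did it happen?)
track_record = [
    (0.9, True), (0.9, True), (0.9, False), (0.9, False),
    (0.6, True), (0.6, False), (0.6, True), (0.6, False), (0.6, True),
]

table = calibration_table(track_record)
for stated, observed in table.items():
    print(f"said {stated:.0%}, happened {observed:.0%} of the time")
```

In this invented record, the forecasts made at 60% came true 60% of the time, while those made at 90% came true only half the time, which is the signature of overconfidence. None of this is possible if the original judgments were only ever expressed as “likely” or “might.”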

Conclusion

By measuring the phenomena and concepts in foreign policy – using mathematical language to discuss them and accompanying that discussion with empirical observation – practitioners can reduce their uncertainty and make better decisions. Despite the fact that there are proven ways of measuring important phenomena in foreign policy, the foreign policy establishment has for too long been resistant to doing so. The complexity of international relations means that U.S. diplomacy needs more, not less, quantitative sophistication than other disciplines. Working to improve its mathematical literacy is the first step U.S. diplomacy can and must take to benefit from the paradigmatic changes which have swept through technology, business, and science. If it fails to take advantage of these changes, U.S. foreign policy will be buried by them. Neither the United States nor the world can afford that outcome.

Ultimately, fp21 cares about crafting more effective diplomatic institutions. But to improve as a foreign policy community, we need to be able to tell up from down. We need to be able to find evidence in the real world about what works well and what doesn’t. In other words, we need to make measurements.

Bibliography

Blattman, C. (2022). Why We Fight: The Roots of War and the Paths to Peace. Viking.

Brignall, S., & Modell, S. (2000). An institutional perspective on performance measurement and management in the ‘new public sector.’ Management Accounting Research, 11(3), 281–306. https://doi.org/10.1006/mare.2000.0136

Cultivate Labs | Collective intelligence solutions using crowd forecasting, prediction markets and enterprise crowdfunding. (n.d.). Retrieved August 13, 2023, from https://www.cultivatelabs.com/crowdsourced-forecasting-guide/what-is-forecast-calibration

DOS Learning Agenda. (n.d.). Retrieved July 4, 2023, from https://www.state.gov/wp-content/uploads/2022/06/Department-of-State-Learning-Agenda-2022-2026-2.pdf

Evaluation and Measurement Unit—Office of Public Diplomacy. (n.d.). Retrieved July 1, 2023, from https://2009-2017.state.gov/r/ppr/emu/index.htm

Evidence-Based Policymaking: A Guide for Effective Government. (n.d.).

Friedman, J., Baker, J. D., Mellers, B. A., Tetlock, P. E., & Zeckhauser, R. (2018). The Value of Precision in Probability Assessment: Evidence from a Large-Scale Geopolitical Forecasting Tournament. International Studies Quarterly, 62(2), 410–422.

Foong Khong, Y. (1992). Analogies at War. https://press.princeton.edu/books/paperback/9780691025353/analogies-at-war

Global Diplomacy Index – About. (n.d.). Lowy Institute. Retrieved July 7, 2023, from https://globaldiplomacyindex.lowyinstitute.org

Guarini, K. (2021, June 6). You Get What You Measure (So Be Careful What You Measure). Mother of Invention. https://mother-of-invention.net/2021/06/06/you-get-what-you-measure-so-be-careful-what-you-measure/

Hicks, J. (2021). Defining and Measuring Diplomatic Influence. Institute of Development Studies (IDS). https://doi.org/10.19088/K4D.2021.032

Hubbard, D. W. (2010). How to measure anything: Finding the value of “intangibles” in business (2nd ed). Wiley.

Lowy Institute. (n.d.). Diplomatic influence data – Lowy Institute Asia Power Index. Lowy Institute Asia Power Index 2023. Retrieved July 7, 2023, from https://power.lowyinstitute.org/data/diplomatic-influence/diplomatic-network/

Kahneman, D. (2011). Thinking, Fast and Slow. Macmillan.

Labaree, R. V. (n.d.). Global Measurements and Indexes [Research Guide]. Retrieved July 1, 2023, from https://libguides.usc.edu/IR/GlobalIndexes

Lichtenstein, S., & Fischhoff, B. (n.d.). Calibration of Probabilities: The State of the Art to 1980.

Malis, M. (2021). Conflict, Cooperation, and Delegated Diplomacy. International Organization, 75(4), 1018–1057. https://doi.org/10.1017/S0020818321000102

Malis, M., & Smith, A. (2021). State Visits and Leader Survival. American Journal of Political Science, 65(1), 241–256. https://doi.org/10.1111/ajps.12520

Malis, M., & Thrall, C. (n.d.). The Bureaucratic Origins of International Law: A Pre-Analysis Plan. Dropbox. Retrieved May 24, 2023, from https://www.dropbox.com/s/ufot9f5gg97cr3f/Thrall%20%26%20Malis%20-%20Bureaucratic%20Origins%20-%20NCGG%20Workshop.pdf?dl=0

Mathiason, J. (2007). Linking Diplomatic Performance Assessment to International Results-Based Management. FOREIGN MINISTRIES. https://www.diplomacy.edu/wp-content/uploads/2011/12/Foreign-Ministries-WEB.pdf

Mauboussin, A., & Mauboussin, M. J. (2018, July 3). If You Say Something Is “Likely,” How Likely Do People Think It Is? Harvard Business Review. https://hbr.org/2018/07/if-you-say-something-is-likely-how-likely-do-people-think-it-is

McAfee, A., & Brynjolfsson, E. (2012, October 1). Big Data: The Management Revolution. Harvard Business Review. https://hbr.org/2012/10/big-data-the-management-revolution

Pahlavi, P. (2007). Evaluating Public Diplomacy Programmes. The Hague Journal of Diplomacy, 2(3), 255–281. https://doi.org/10.1163/187119007X240523

Pearl, J., & Mackenzie, D. (2018). The Book of Why: The New Science of Cause and Effect. Penguin Books Limited.

Piper, K. (2020, October 14). Science has been in a “replication crisis” for a decade. Have we learned anything? Vox. https://www.vox.com/future-perfect/21504366/science-replication-crisis-peer-review-statistics

Portland. (n.d.). Soft Power 30. Soft Power. Retrieved June 8, 2023, from https://softpower30.com/

Rana, K. (2004). Performance Management in Foreign Ministries. Netherlands Institute of International Relations “Clingendael,” Discussion papers in diplomacy(93).

Reiss, J., & Sprenger, J. (2020). Scientific Objectivity. In E. N. Zalta (Ed.), The Stanford Encyclopedia of Philosophy (Winter 2020). Metaphysics Research Lab, Stanford University. https://plato.stanford.edu/archives/win2020/entries/scientific-objectivity/

Rose, A. K. (2016). Like Me, Buy Me: The Effect of Soft Power on Exports. Economics & Politics, 28(2), 216–232. https://doi.org/10.1111/ecpo.12077

Seymour, M. (2020, December 14). Measuring Soft Power—Foreign Policy Research Institute. https://www.fpri.org/article/2020/12/measuring-soft-power/

Silver, N. (2012). The Signal and the Noise: Why So Many Predictions Fail--but Some Don’t. Penguin Press.

Spokojny, D., & Scherer, T. L. (2021, July 26). Foreign Policy Should Be Evidence-Based. War on the Rocks. https://warontherocks.com/2021/07/foreign-policy-should-be-evidence-based/

Sutherland, W. J. (2022). 1. Introduction: The Evidence Crisis and the Evidence Revolution. 3–28. https://doi.org/10.11647/obp.0321.01

Tetlock, P. E. (n.d.). Superforecasting.

Tversky, A., & Kahneman, D. (1974). Judgment under Uncertainty: Heuristics and Biases. Science, 185(4157), 1124–1131. https://doi.org/10.1126/science.185.4157.1124

White, H. (2019). The twenty-first century experimenting society: The four waves of the evidence revolution. Palgrave Communications, 5(1), Article 1. https://doi.org/10.1057/s41599-019-0253-6

Words of Estimative Probability. (n.d.).